Your Google rankings look fine.

Yet your brand never shows up in ChatGPT answers.

That’s not “bad SEO.” It’s a different failure mode.

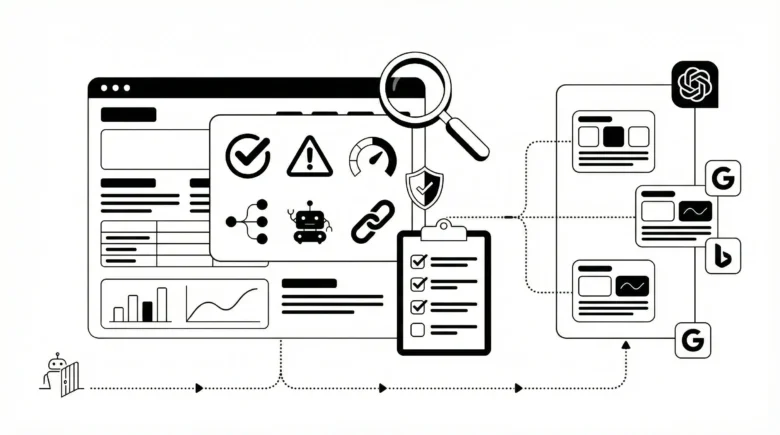

ChatGPT “ranking” is mostly about eligibility + extractability + citation-worthiness—and you can audit all three, end to end. ChatGPT Search also shows sources and links, so your site either gets pulled in… or ignored.

Key Takeaways (print this)

- Don’t block OAI-SearchBot if you want to appear in ChatGPT Search answers.

- Make content easy to extract: clean HTML, clear headings, short answer blocks, visible tables.

- Win citations with proof: add stats + credible sourcing; keyword stuffing doesn’t help.

- Measure like a product: track `utm_source=chatgpt.com` and prompt-level share-of-voice.

First: what “ranking in ChatGPT” actually means

ChatGPT has multiple ways of “touching” your site.

Treat them like separate audit targets.

| Surface | What it does | Bot / mechanism you’ll see | What can block it |
|---|---|---|---|
| ChatGPT Search answers | Cites + links sources | OAI-SearchBot (search indexing) | robots.txt for OAI-SearchBot |
| Training influence | Long-term model learning | GPTBot (training crawl) | robots.txt for GPTBot |
| User-triggered fetch | “Open this page” actions | ChatGPT-User | robots.txt may not apply; use access controls/noindex |

Here’s the punchline.

You can be “SEO-visible” and still be “ChatGPT-invisible.”

One study found 87% of SearchGPT citations matched Bing’s top organic results, which means Bing visibility (and basic crawl hygiene) is still a major lever.

But it’s not only rank.

ChatGPT can cite certain aggregators and “easy-to-use” sources even when they aren’t top-ranked.

Step 1 — Pass the eligibility gate (so ChatGPT can actually reach you)

If you fail here, nothing else matters.

Not your content. Not your backlinks.

1) Robots.txt: allow OAI-SearchBot (and decide what to do with GPTBot)

OpenAI is explicit: OAI-SearchBot is used to surface websites in ChatGPT’s search features, and sites opted out won’t be shown in search answers.

Also useful: OpenAI notes it can take ~24 hours for robots.txt updates to take effect.

Minimal example (allow search, block training):

    User-agent: OAI-SearchBot
    Allow: /

    User-agent: GPTBot
    Disallow: /

That setup is common for publishers who want traffic without model-training reuse.

Audit checks

- Fetch `/robots.txt` and confirm the rules exist for OAI-SearchBot.

- Confirm OAI-SearchBot isn’t blocked by a CDN/WAF allowlist mistake (OpenAI publishes IP ranges).

- Keep robots.txt syntax clean (rules must sit under a user-agent group).
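The robots.txt check above is easy to script. Here is a minimal sketch using Python's standard-library `urllib.robotparser`. The robots.txt body and the `example.com` URL are placeholders, `bot_access` is a hypothetical helper name, and a real audit would fetch the live `/robots.txt` instead of using an inline string.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt body (assumption); fetch the real file in an actual audit.
ROBOTS_TXT = """\
User-agent: OAI-SearchBot
Allow: /

User-agent: GPTBot
Disallow: /
"""


def bot_access(robots_txt: str, url: str, bots: tuple[str, ...]) -> dict[str, bool]:
    """Return {bot name: allowed?} for one URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {bot: parser.can_fetch(bot, url) for bot in bots}


result = bot_access(ROBOTS_TXT, "https://example.com/pricing", ("OAI-SearchBot", "GPTBot"))
print(result)
```

Note that this only evaluates robots.txt rules. It says nothing about CDN/WAF blocks, which need a separate check against server logs or firewall rules.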

2) Indexability: don’t “accidentally disappear”

ChatGPT Search can surface and cite public pages, but your technical signals still matter: status codes, canonicals, redirects, and thin duplicates.

And this part surprises people: OpenAI’s publisher FAQ says that if a page is disallowed but the URL is discovered elsewhere, ChatGPT may still surface a link and title—and if you don’t want even that, use noindex (but allow crawling so it can be read).

Audit checks

- No mixed signals: `noindex` + indexable canonicals is a mess.

- No infinite redirect chains.

- 200 status for your “money” pages.
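These three checks can be expressed as one small function. The sketch below assumes you have already collected the signals per URL (from a crawler export, for example). The `PageSignals` fields, the `indexability_issues` name, and the `max_hops` limit of 3 are illustrative choices, not values from any OpenAI or Bing documentation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PageSignals:
    """Signals collected for one URL (all field names are illustrative)."""
    url: str
    status: int                # final HTTP status code
    canonical: Optional[str]   # href of rel="canonical", None if absent
    meta_robots: str           # content of the robots meta tag, "" if absent
    redirect_hops: int         # redirects followed before the final response


def indexability_issues(page: PageSignals, max_hops: int = 3) -> list[str]:
    """Return human-readable problems; an empty list means the page passes."""
    issues = []
    if page.status != 200:
        issues.append(f"status {page.status} instead of 200")
    has_noindex = "noindex" in page.meta_robots.lower()
    # Assumed definition of a "mixed signal": noindex plus a canonical to another URL.
    if has_noindex and page.canonical and page.canonical != page.url:
        issues.append("noindex plus a canonical pointing to another URL")
    if page.redirect_hops > max_hops:
        issues.append(f"{page.redirect_hops} redirect hops (limit {max_hops})")
    return issues


page = PageSignals(
    url="https://example.com/pricing?ref=nav",
    status=200,
    canonical="https://example.com/pricing",
    meta_robots="noindex, follow",
    redirect_hops=0,
)
print(indexability_issues(page))
```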

3) Bing visibility isn’t optional

If ChatGPT search citations skew heavily toward what Bing already ranks, your audit must include Bing indexing, not just Google Search Console.

Do this

- Confirm your key URLs are indexed in Bing.

- Fix crawl traps and duplicate parameter pages that waste crawl capacity.

Step 2 — Make your content extractable (so it can be quoted cleanly)

ChatGPT doesn’t “admire” your design.

It extracts meaning.

Generative visibility depends on whether your page can be retrieved and correctly interpreted—not only whether it ranks.

The extractability checklist (brutal, but accurate)

If you only do one audit step, do this one.

- View-source shows the main content (not just a JS shell).

- One H1. Clear H2/H3 hierarchy.

- Short answer blocks near the top (2–5 sentences).

- Tables for comparisons (real `<table>` tags, not screenshots).

- Semantic HTML: `<article>`, `<nav>`, `<main>`, `<header>`.

- No “content behind interactions only” (tabs/accordions that never render server-side).

- Stable URLs (don’t rotate IDs per session).

- Clear author + updated date on-page (and consistent with schema).

- Internal links that point to “next best answer,” not just “related posts.”

- Fast first render (we’ll benchmark this in Step 4).

And one more that’s new-school.

Accessibility is now an AI feature.

OpenAI says ChatGPT Agent in Atlas uses ARIA tags to interpret page structure and interactive elements, and recommends following WAI-ARIA best practices for better compatibility.
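A few checklist items can be tested against the raw HTML a server returns, before any JavaScript runs. This is a rough sketch built on the standard-library `html.parser`. The class name, the `scan` helper, and the sample markup are made up for illustration, and it covers only the H1 count, real tables, semantic tags, and whether visible text exists at all.

```python
from html.parser import HTMLParser

SEMANTIC_TAGS = {"article", "nav", "main", "header"}
NON_VISIBLE = {"script", "style"}


class ExtractabilityScan(HTMLParser):
    """Collects a few structural signals from server-rendered HTML."""

    def __init__(self):
        super().__init__()
        self.h1_count = 0
        self.table_count = 0
        self.semantic_seen = set()
        self.visible_words = 0
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self.h1_count += 1
        elif tag == "table":
            self.table_count += 1
        elif tag in SEMANTIC_TAGS:
            self.semantic_seen.add(tag)
        if tag in NON_VISIBLE:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in NON_VISIBLE and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.visible_words += len(data.split())


def scan(html: str) -> ExtractabilityScan:
    scanner = ExtractabilityScan()
    scanner.feed(html)
    scanner.close()
    return scanner


SAMPLE = """
<html><body><main><article>
<h1>Pricing</h1>
<p>Plans start at 10 dollars per month.</p>
<table><tr><td>Pro</td><td>10</td></tr></table>
</article></main>
<script>var x = "not visible text";</script>
</body></html>
"""

report = scan(SAMPLE)
print(report.h1_count, report.table_count, sorted(report.semantic_seen), report.visible_words)
```

A page that returns zero visible words from this scan is a likely "JS shell" and deserves a manual view-source check.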

Step 3 — Win citations (audit for proof, entities, and uniqueness)

This is where most sites lose.

They sound correct, but they aren’t useful enough to cite.

A major GEO research paper found the best content tweaks improved visibility metrics by ~41% (position-adjusted word count) and ~28% (subjective impression), while keyword stuffing didn’t perform well.

So your audit should grade pages on three “citation triggers.”

1) Evidence density (numbers beat adjectives)

Add hard data.

Not vibes.

Audit targets (per 1,000 words)

- 3–7 concrete numbers (benchmarks, ranges, rates, thresholds).

- At least 2 outbound citations to reputable sources (standards bodies, docs, primary research).

- 1 mini “how to measure this” snippet (tool + metric + threshold).

This aligns with what worked best in GEO experiments: statistics + citations + quotes.
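To grade pages against those targets, a crude counter is often enough for a first pass. The sketch below is a heuristic, not a standard metric: the regular expressions, the `evidence_density` name, and the `example.com` domain are assumptions, and it will over-count digits that appear inside URLs or product names.

```python
import re

NUMBER_RE = re.compile(r"\d+(?:[.,]\d+)*%?")   # 200, 2.5, 1,000, 41%
LINK_RE = re.compile(r"https?://([\w.-]+)")    # captures the host of each link


def evidence_density(text: str, own_domain: str) -> dict:
    """Count numbers and outbound links, scaled per 1,000 words."""
    words = len(text.split())
    numbers = len(NUMBER_RE.findall(text))
    hosts = [h.lower().rstrip(".") for h in LINK_RE.findall(text)]
    outbound = [h for h in hosts if h != own_domain and not h.endswith("." + own_domain)]
    scale = 1000 / words if words else 0.0
    return {
        "words": words,
        "numbers": numbers,
        "outbound_links": len(outbound),
        "numbers_per_1k": numbers * scale,
        "outbound_per_1k": len(outbound) * scale,
    }


text = (
    "LCP should be under 2.5 seconds and INP under 200 ms. "
    "See https://web.dev/vitals for details, or https://example.com/blog for our notes."
)
stats = evidence_density(text, own_domain="example.com")
print(stats)
```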

2) Entity clarity (make it impossible to misunderstand you)

ChatGPT and other generative engines reward content that is clearly structured and easy to parse, with strong entity definition and trust signals.

Audit checks

- Consistent naming: product names, features, people, locations.

- Organization schema + author schema where relevant.

- “What it is / who it’s for / when to use it” appears early.

Structured data won’t guarantee anything, but JSON-LD is the recommended format in Google’s docs and helps machines interpret your page.
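As a rough illustration, here is one way to generate Article markup with author and publisher entities. The schema.org types and properties used (`Article`, `Person`, `Organization`, `headline`, `dateModified`) are real. The `article_jsonld` helper and every value passed to it are placeholders.

```python
import json


def article_jsonld(headline: str, url: str, date_modified: str,
                   author_name: str, org_name: str) -> dict:
    """Build a minimal schema.org Article object as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "url": url,
        "dateModified": date_modified,
        "author": {"@type": "Person", "name": author_name},
        "publisher": {"@type": "Organization", "name": org_name},
    }


doc = article_jsonld(
    headline="How to audit your site for ChatGPT visibility",  # placeholder values
    url="https://example.com/chatgpt-audit",
    date_modified="2025-01-15",
    author_name="Jane Doe",
    org_name="Example Co",
)
script_tag = (
    '<script type="application/ld+json">\n'
    + json.dumps(doc, indent=2)
    + "\n</script>"
)
print(script_tag)
```

Keep the author name and date here identical to what is visible on the page, per the extractability checklist.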

3) “Information gain” (say something others don’t)

If your page is a remix, you’ll be skipped.

Fast.

Your audit should flag pages with:

- identical subheads to top-ranking competitors

- no proprietary examples

- no original frameworks

- no “failure modes” section

That’s why Vercel’s LLM SEO guidance leans hard on depth, structure, and originality—and calls out that keyword stuffing muddies the signal.
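The first flag, identical subheads, can be approximated with a simple set overlap. This sketch uses Jaccard similarity on normalized headings. That choice, the function names, and the sample headings are assumptions for illustration, and any cutoff you pick for "too similar" should be calibrated on your own pages.

```python
def normalize(heading: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not count."""
    return " ".join(heading.lower().split())


def subhead_overlap(ours: list[str], theirs: list[str]) -> float:
    """Jaccard similarity between two sets of subheads (0.0 to 1.0)."""
    a = {normalize(h) for h in ours}
    b = {normalize(h) for h in theirs}
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


ours = ["What is GEO?", "Pricing", "Our failure modes"]
theirs = ["what is  geo?", "Pricing", "Features"]
overlap = subhead_overlap(ours, theirs)
print(overlap)
```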

Step 4 — Speed still matters (and the thresholds are clear)

Slow pages don’t get read.

They get abandoned.

Core Web Vitals targets you can audit today:

- LCP ≤ 2.5s

- INP ≤ 200ms

- CLS ≤ 0.1

And Google’s Search Console report groups URLs by “Good / Needs improvement / Poor” using field data.

| Metric | “Good” target | Where to pull it | What to fix first |
|---|---|---|---|
| LCP | ≤ 2.5s | Search Console + CrUX | image/font bloat, SSR/HTML size |
| INP | ≤ 200ms | Search Console + RUM | heavy JS, long tasks, third-party scripts |
| CLS | ≤ 0.1 | Search Console + lab tests | late-loading banners, image dimensions |
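If you export field data, bucketing it takes a few lines. The sketch below treats the "Good" targets above as inclusive upper bounds. The "Poor" boundaries (4.0s, 500ms, 0.25) are not stated in this article; they come from Google's published Core Web Vitals thresholds. The `rate` helper name is arbitrary.

```python
# (good_max, poor_min) per metric: values <= good_max are "Good",
# values > poor_min are "Poor", anything in between "Needs improvement".
THRESHOLDS = {
    "LCP": (2.5, 4.0),    # seconds
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify one field-data value for LCP, INP, or CLS."""
    good_max, poor_min = THRESHOLDS[metric]
    if value <= good_max:
        return "Good"
    if value <= poor_min:
        return "Needs improvement"
    return "Poor"


# Hypothetical 75th-percentile values for one URL group.
sample = {"LCP": 3.1, "INP": 180, "CLS": 0.31}
ratings = {metric: rate(metric, value) for metric, value in sample.items()}
print(ratings)
```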

Step 5 — Instrument the audit (so you can prove it worked)

If you can’t measure it, you’ll guess.

Guessing is expensive.

OpenAI’s publisher FAQ says ChatGPT includes utm_source=chatgpt.com in referral URLs, which makes AI traffic trackable in analytics.
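If your analytics tool does not segment this for you, the parameter is simple to detect in raw landing URLs. This is a minimal sketch using `urllib.parse`. The function name and the sample URLs are invented for the example.

```python
from urllib.parse import parse_qs, urlparse


def is_chatgpt_referral(landing_url: str) -> bool:
    """True when the landing URL carries utm_source=chatgpt.com."""
    query = parse_qs(urlparse(landing_url).query)
    return "chatgpt.com" in query.get("utm_source", [])


# Hypothetical landing URLs pulled from a log or analytics export.
landings = [
    "https://example.com/pricing?utm_source=chatgpt.com",
    "https://example.com/pricing?utm_source=newsletter&utm_medium=email",
    "https://example.com/blog/audit",
]
ai_hits = [url for url in landings if is_chatgpt_referral(url)]
print(len(ai_hits), "of", len(landings), "landings came from ChatGPT")
```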

Build a simple “ChatGPT Visibility Score”

Use a 0–100 score you can update weekly.

- Eligibility (30): OAI-SearchBot allowed, indexable, no crawl blocks

- Extractability (30): clean HTML, headings, answer blocks, semantic structure

- Citeworthiness (30): stats + sources + entity clarity + unique sections

- Tracking (10): UTM reporting + prompt set monitored

Then add a KPI that aligns with how generative answers behave:

Citation Share-of-Voice (cSOV) = (# prompts where your domain is cited) / (total prompts tested)

This mirrors the “citation economy” logic behind GEO: presence inside answers beats position #3 on a SERP.
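Both numbers are easy to compute once you log the inputs. In the sketch below the 30/30/30/10 weights come from the list above. Scaling each pillar by the fraction of its checks that pass is an assumption about how to fill in the score, and the prompt results are made-up sample data.

```python
WEIGHTS = {"eligibility": 30, "extractability": 30, "citeworthiness": 30, "tracking": 10}


def visibility_score(pass_rates: dict[str, float]) -> float:
    """Weighted 0-100 score; pass_rates maps pillar -> fraction of checks passed."""
    return sum(weight * pass_rates.get(pillar, 0.0) for pillar, weight in WEIGHTS.items())


def csov(cited_by_prompt: dict[str, bool]) -> float:
    """Citation Share-of-Voice: cited prompts divided by total prompts tested."""
    if not cited_by_prompt:
        return 0.0
    return sum(cited_by_prompt.values()) / len(cited_by_prompt)


# Hypothetical weekly inputs.
score = visibility_score(
    {"eligibility": 1.0, "extractability": 0.5, "citeworthiness": 0.5, "tracking": 0.0}
)
share = csov({
    "best crm for startups": True,
    "crm pricing comparison": False,
    "how to migrate crm data": True,
    "crm with slack integration": False,
})
print(score, share)
```

Keep the prompt set fixed from week to week; changing it resets your baseline.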

Step 6 — Where LLMic fits (smoothly, without the sales pitch)

Most teams try to solve this with spreadsheets.

That breaks at page 50.

LLMic positions itself as a native macOS crawler for AI Search, built to audit websites for hallucination risk and token economy constraints, and to validate llms.txt—without cloud subscriptions or per-page limits.

So here’s a clean way to use it in your audit loop:

- Crawl the site and flag pages likely to be misread or misquoted.

- Fix eligibility and extraction blockers (robots, HTML structure, ARIA labels).

- Add evidence blocks (stats + citations) to priority pages.

- Re-crawl and compare before/after scores.

- Watch `utm_source=chatgpt.com` and cSOV weekly.

It’s not magic.

It’s discipline—made faster.

Information Gain Table: Traditional SEO Audit vs ChatGPT Audit

| Audit dimension | Traditional SEO question | ChatGPT visibility question | Pass/Fail metric | What fails most often |
|---|---|---|---|---|
| Crawl access | Can Googlebot crawl? | Can OAI-SearchBot crawl? | robots allows OAI-SearchBot | blanket “block AI bots” rule |
| Indexing | Is it indexed? | Is it discoverable and citable? | appears in Bing + has clean canonicals | dupes + messy canonicals |
| Page structure | Is content “good”? | Is content extractable in chunks? | headings + answer blocks exist | JS-only rendering |
| Authority | Backlinks + E-E-A-T | “Safe to cite” signals (proof + clarity) | stats + sources per 1k words | unsupported claims |
| Performance | CWV improves rank | CWV improves completion + extraction | LCP/INP/CLS “Good” | third-party script drag |
| Measurement | Rankings + traffic | cSOV + utm_source=chatgpt.com | tracked prompts + AI referrals | no prompt set, no baseline |

FAQs

Can I “rank #1 in ChatGPT” like Google?

Not really—your goal is to be retrieved and cited inside answers, which is closer to share-of-voice than a single rank.

If I block GPTBot, will I disappear from ChatGPT Search?

No—GPTBot controls training crawl; ChatGPT Search visibility depends on OAI-SearchBot access.

What’s the fastest win in a ChatGPT audit?

Fix bot access first, then add stats + citations to your top pages; those tactics showed strong gains in GEO experiments.