AI hallucinations are incorrect or misleading outputs generated by AI models.

One common cause is that the model was not trained on sufficient or suitable data.

AI hallucinations are especially problematic in YMYL (Your Money or Your Life) niches, such as healthcare and finance, where incorrect information can directly affect people’s health or finances.

Why Do AI Hallucinations Occur?

We know that AI models are trained on a set of data and make predictions based on that data.

Hence, the more relevant, high-quality data a model has, the better the predictions it can generally make.

So, a model’s output depends heavily on its training data.

For example, if we train an AI model only on images of cancerous cells, it can easily identify a cancer cell, but it may also label a healthy cell as cancerous, because it has never learned what a healthy cell looks like.
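To make this failure mode concrete, here is a minimal Python sketch using a toy nearest-centroid classifier, a deliberately simple stand-in rather than a real medical model. The two features and all the numbers are made-up assumptions. A model trained only on cancerous cells has only one label to choose from, so it labels every input, including a healthy cell, as cancerous.

```python
from statistics import mean

# Hypothetical toy data: each cell is described by two made-up features
# (e.g. normalized size and irregularity). The numbers are illustrative only.


def train_centroids(samples):
    """Compute one centroid (mean feature vector) per label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(col) for col in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(centroids[label], features)),
    )


# Training set containing ONLY cancerous cells.
only_cancer = [((0.9, 0.8), "cancerous"), ((0.8, 0.9), "cancerous"), ((0.85, 0.7), "cancerous")]
model = train_centroids(only_cancer)

healthy_cell = (0.2, 0.1)
print(predict(model, healthy_cell))  # cancerous (the only label the model knows)

# Adding healthy examples gives the model a second option to choose from.
balanced = only_cancer + [((0.2, 0.2), "healthy"), ((0.1, 0.15), "healthy")]
print(predict(train_centroids(balanced), healthy_cell))  # healthy
```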

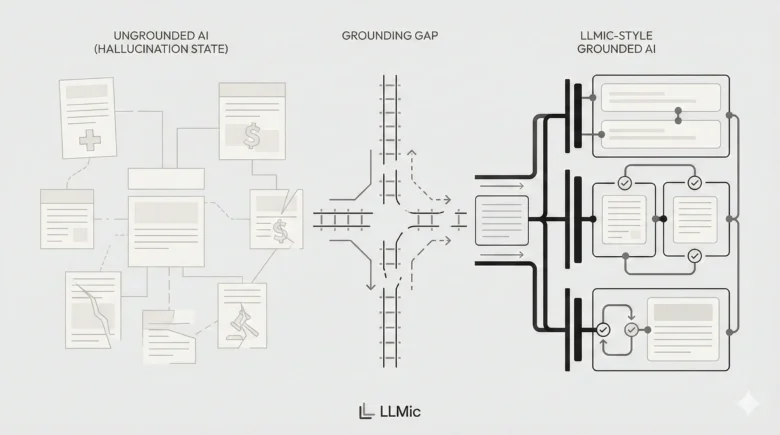

Another reason an AI may hallucinate is a lack of proper grounding.

Suppose you created an AI model to generate the title and meta description of an article. If proper grounding is not set up (that is, the model’s output is not tied to the article’s actual content), the model may produce a title and meta description containing information that is not present in the article itself. This is also a form of hallucination.
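One crude way to catch this kind of ungrounded output is to check whether the generated text introduces words that never appear in the source article. The sketch below is a simple word-overlap heuristic; the stopword list and function names are hypothetical. It will miss paraphrased errors and flag harmless synonyms, so treat it as an illustration rather than a complete grounding check.

```python
import re

# Hypothetical list of filler words to ignore when comparing texts.
STOPWORDS = {"a", "an", "the", "and", "or", "of", "to", "in", "for", "on", "with", "is", "are", "how", "your"}


def content_words(text):
    """Lowercase the text and return its words, minus common stopwords."""
    return {w for w in re.findall(r"[a-z0-9']+", text.lower()) if w not in STOPWORDS}


def ungrounded_terms(generated, article):
    """Return words in the generated text that never appear in the source article."""
    return content_words(generated) - content_words(article)


article = "Regularization penalizes large weights so that a model overfits less."
title = "How Regularization Cures Cancer"
print(sorted(ungrounded_terms(title, article)))  # ['cancer', 'cures']
```

A non-empty result does not prove a hallucination, but it is a cheap signal that the output deserves a human review.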

Let’s look at the different types of hallucinations an AI can produce.

Types of AI Hallucinations

There are primarily three types of AI hallucinations:

- Incorrect Predictions: An AI model may predict an event incorrectly. For example, if you create an AI model to forecast the weather, it may predict the wrong weather for tomorrow.

- False Positive: An AI model may flag something that is not actually there. For example, a model built to detect fraudulent transactions may mark a legitimate transaction as fraudulent.

- False Negative: An AI model may also miss something it should have detected. For example, the cancer-detection model described earlier may classify a cancerous cell as healthy.
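False positives and false negatives are usually counted by comparing a model’s predictions with the true labels. A minimal sketch, using hypothetical fraud-detection labels where "fraud" is treated as the positive class:

```python
def confusion_counts(actual, predicted, positive="fraud"):
    """Count true/false positives and negatives for a binary classifier."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for a, p in zip(actual, predicted):
        if p == positive:
            # The model raised an alarm: correct (TP) or a false alarm (FP).
            counts["TP" if a == positive else "FP"] += 1
        else:
            # The model stayed quiet: a miss (FN) or correctly quiet (TN).
            counts["FN" if a == positive else "TN"] += 1
    return counts


# Hypothetical labels for five transactions.
actual = ["fraud", "ok", "ok", "fraud", "ok"]
predicted = ["fraud", "fraud", "ok", "ok", "ok"]
print(confusion_counts(actual, predicted))
# {'TP': 1, 'FP': 1, 'TN': 2, 'FN': 1}
```

Here the second transaction is a false positive (a legitimate transaction flagged as fraud) and the fourth is a false negative (real fraud that was missed).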

Now, let’s look at some techniques for preventing AI hallucinations.

How to Prevent AI Hallucinations

Limiting the Possible Outcomes

When you train an AI model, make sure to limit the possible outcomes. One technique that helps is regularization, which adds a penalty to the training objective when the model becomes overly complex (for example, when its weights grow too large). This discourages the model from overfitting its training data and producing extreme, unsupported predictions.
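As a minimal illustration of L2 (ridge) regularization, one common form of regularization, consider a one-parameter model y ≈ w·x trained by minimizing the squared error plus a penalty λw². Setting the derivative to zero gives w = Σxy / (Σx² + λ), so a larger λ pulls the weight toward zero. The data and λ values below are made up for illustration.

```python
def ridge_weight(xs, ys, lam):
    """Closed-form weight for y ≈ w * x minimizing sum((y - w*x)^2) + lam * w^2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # hypothetical data where y = 2x exactly

for lam in (0.0, 1.0, 10.0):
    # The stronger the penalty, the more the weight shrinks toward zero.
    print(lam, round(ridge_weight(xs, ys, lam), 3))
# 0.0 2.0
# 1.0 1.867
# 10.0 1.167
```

Choosing λ is a trade-off: too little regularization lets the model overfit, while too much makes it underfit.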

Providing Relevant Training Data

When you train an AI model, make sure you provide it with complete information related to its goal. For example, if you train an AI model on cancerous cell data, make sure the training set includes both cancerous and non-cancerous cells.

Make sure the training data is factual and unbiased.
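Before training, it can help to check whether every class the model needs to learn is actually present in the data, and in reasonable proportion. A minimal sketch using Python’s collections.Counter; the function name and the 20% threshold are hypothetical, and the right threshold depends on the problem. Note that this only checks completeness of the labels, not whether the data is factual.

```python
from collections import Counter


def check_label_balance(labels, expected_labels, min_share=0.2):
    """Return warnings for labels that are missing or under-represented.

    min_share is an arbitrary threshold chosen for illustration.
    """
    counts = Counter(labels)
    total = len(labels)
    warnings = []
    for label in expected_labels:
        share = counts[label] / total if total else 0.0
        if counts[label] == 0:
            warnings.append(f"missing label: {label}")
        elif share < min_share:
            warnings.append(f"under-represented label: {label} ({share:.0%})")
    return warnings


labels = ["cancerous"] * 95 + ["healthy"] * 5
print(check_label_balance(labels, ["cancerous", "healthy"]))
# ['under-represented label: healthy (5%)']
```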

Templatization

It is good practice to give an AI model its input, and to request its output, in a templatized (fixed, structured) format. This reduces the room for hallucinated output and helps the model understand your brief better.
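A minimal sketch of what templatization could look like, reusing the title and meta description example from earlier: a fixed prompt template built with Python’s string.Template, and a parser that rejects any reply not matching the expected JSON fields. The template wording, field names, and function names are hypothetical, and no real model API is called here.

```python
import json
from string import Template

# Hypothetical prompt template: fixed instructions plus a slot for the article text.
PROMPT = Template(
    "Using ONLY the article below, write a title and a meta description.\n"
    'Reply with JSON only: {"title": "...", "meta_description": "..."}\n\n'
    "Article:\n$article"
)

# The fields the output template requires (hypothetical names).
REQUIRED_KEYS = {"title", "meta_description"}


def build_prompt(article):
    """Fill the template so every request has the same structure."""
    return PROMPT.substitute(article=article)


def parse_reply(reply):
    """Accept the reply only if it is a JSON object with exactly the expected fields."""
    data = json.loads(reply)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict) or set(data) != REQUIRED_KEYS:
        raise ValueError("reply does not match the output template")
    return data


print(build_prompt("Regularization penalizes large weights."))
reply = '{"title": "What Is Regularization?", "meta_description": "A short introduction."}'
print(parse_reply(reply)["title"])  # What Is Regularization?
```

Rejecting replies that break the template does not prove their content is correct, but it catches malformed output early and keeps the model’s task narrowly defined.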

Setting a Feedback Mechanism

AI models are always trained on a limited set of data, so it helps to give them feedback on the outputs they produce. Over time, this feedback helps steer them toward the desired results.
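The section does not say how feedback is applied. One classic, simple option is an online update in the style of the perceptron learning rule: when a user flags a prediction as wrong and supplies the correct label, the model’s weights are nudged toward that label. The function names, features, and learning rate below are hypothetical. Large language models typically use feedback differently (for example, by collecting rated outputs for later fine-tuning), so treat this only as an illustration of the core idea.

```python
def classify(weights, bias, features):
    """Binary prediction: 1 if the weighted sum is positive, else 0."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if score > 0 else 0


def apply_feedback(weights, bias, features, correct_label, lr=1.0):
    """Perceptron-style update: the weights change only when the prediction was wrong."""
    error = correct_label - classify(weights, bias, features)  # -1, 0, or +1
    new_weights = [w + lr * error * x for w, x in zip(weights, features)]
    return new_weights, bias + lr * error


weights, bias = [0.0, 0.0], 0.0
sample = [1.0, 2.0]
print(classify(weights, bias, sample))  # 0 (wrong, according to the user)

# The user reports that the correct label is 1, and the model is updated.
weights, bias = apply_feedback(weights, bias, sample, correct_label=1)
print(classify(weights, bias, sample))  # 1
```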