LLMic Features

AI-First SEO Auditing for the New Search Era


LLMic is built for how AI systems read, understand, and cite content. It analyzes the raw HTML your site serves, its semantic structure, and its machine trust signals, so that your website becomes usable inside AI search engines, not just searchable.

Why Traditional SEO Tools Are No Longer Enough

- Search engines no longer only rank pages – they summarize, rewrite, and cite them.

- Most SEO tools still audit for blue-link ranking signals.

- LLMic audits whether your content can survive inside AI systems that operate on tokens, entities, and contextual trust.

LLMic Core Features

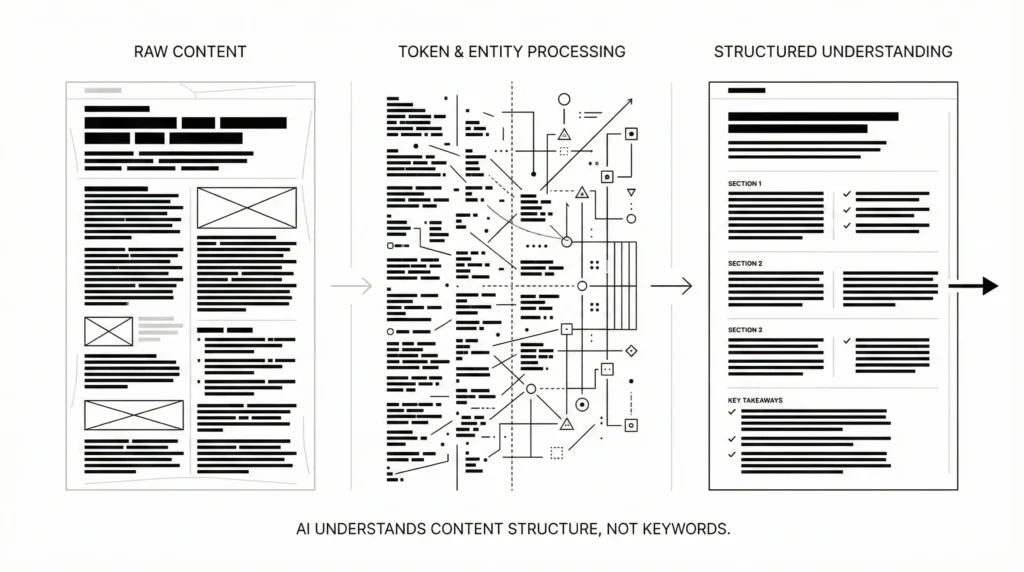

AI-Native Crawling (Local & Private)

LLMic crawls websites locally on your machine, reading the raw HTML the way many AI crawlers do. Nothing is uploaded, cached, or processed in the cloud.

- HTML-first crawling with no JavaScript dependency

- robots.txt-aware crawling

- Single-URL, URL-list, and full-site scans

Why It Matters

If AI cannot access your content directly from HTML, it will not trust or reuse it in generated answers.

What AI sees is what LLMic audits.

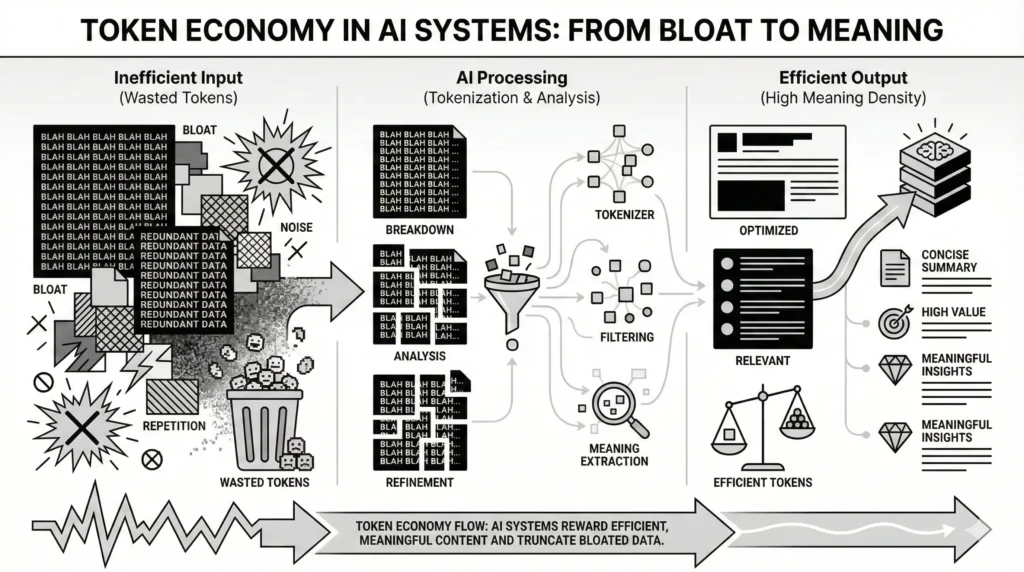

Token Economy Analysis

AI systems operate under strict token limits. LLMic measures how efficiently your content converts tokens into meaning by checking:

- Boilerplate and navigation repetition

- Content redundancy

- Token-to-entity ratio

- Meaningful vs wasted tokens

Why It Matters

- Efficient pages are fully ingested.

- Bloated pages are truncated or ignored.

Search is no longer limited to rankings and blue links.

Modern AI systems like ChatGPT, Google AI Overviews, Gemini, and Perplexity do not simply index pages. They read, compress, summarize, and reuse content.

LLMic is built to audit websites for this new reality.

Instead of focusing only on rankings, LLMic evaluates whether your content can be:

- Read directly from HTML

- Understood with limited token budgets

- Trusted through structure and entities

- Reused safely in AI-generated answers

This makes LLMic an AI-first SEO auditing platform, not a traditional SEO checker.

AI-Native Crawling: Auditing What AI Can Actually See

Most SEO tools rely on cloud crawlers, JavaScript rendering, or cached versions of pages. Many AI crawlers do not: they fetch the raw HTML and skip JavaScript execution.

LLMic crawls websites locally, using an HTML-first approach that mirrors how AI systems access content.

What AI-Native Crawling Covers

- Direct HTML access without JavaScript execution

- robots.txt-aware crawling

- Clean extraction of visible, readable content

- Support for single pages, URL lists, and full websites

This keeps the audit aligned with what an HTML-only AI crawler can actually see.
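The crawling behavior described above can be approximated with Python's standard library. The sketch below is illustrative only and is not LLMic's implementation: it assumes the robots.txt file and the page HTML have already been fetched as strings, and the function names and the `LLMicBot` user agent are hypothetical. It checks robots.txt first, then collects text from the raw HTML without running any JavaScript, skipping `script`, `style`, `template`, and `title` contents.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Elements whose text a reader of the raw HTML would not treat as page content.
SKIPPED_TAGS = {"script", "style", "template", "title"}


class VisibleTextExtractor(HTMLParser):
    """Collects text nodes from raw HTML without executing any JavaScript."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0  # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIPPED_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self.skip_depth == 0:
            self.chunks.append(text)


def html_first_audit(url, robots_txt, raw_html, user_agent="LLMicBot"):
    """Return the visible text of raw_html, or None if robots.txt blocks url.

    user_agent is a hypothetical name used only for the robots.txt check.
    """
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch(user_agent, url):
        return None
    extractor = VisibleTextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    return " ".join(extractor.chunks)
```

Given a robots.txt that disallows `/private/`, a server-rendered page returns its heading and paragraph text, a page whose content is injected by a script returns an empty string, and any URL under `/private/` returns `None`. The empty string is the kind of visibility gap an HTML-first audit is meant to surface.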

Why This Matters for AI Search

If AI cannot access your content directly from HTML:

- It cannot fully ingest the page

- It cannot trust the information

- It will not reuse the content in answers

AI-native crawling replaces assumptions about what AI can see with evidence from the raw HTML, revealing real visibility gaps.

Token Economy Analysis: Measuring Content Efficiency for AI

AI systems operate under strict token limits.

Long, repetitive, or boilerplate-heavy pages are often truncated or ignored.

LLMic analyzes how efficiently your content converts tokens into meaning.

Signals Included in Token Economy Analysis

- Boilerplate and navigation repetition

- Redundant paragraphs and headings

- Token-to-entity ratio

- Meaningful vs wasted tokens
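A rough version of these signals can be computed from plain text blocks. The following Python sketch is an illustration under stated assumptions, not LLMic's scoring: it counts regex word tokens instead of using a real LLM tokenizer (which would give different counts), treats a block as boilerplate if it appears on at least half of the other site pages (a hypothetical threshold), counts exact repeats within the page as redundancy, and looks for a hand-supplied list of entities.

```python
import re
from collections import Counter


def tokenize(text):
    # Crude stand-in for an LLM tokenizer: counts word-like runs only.
    return re.findall(r"\w+", text.lower())


def token_economy(page_blocks, site_pages, entities, boilerplate_share=0.5):
    """Estimate wasted tokens and token-to-entity ratio for one page.

    page_blocks: text blocks (nav items, headings, paragraphs) of the audited page.
    site_pages: block lists of other pages on the same site, used to spot boilerplate.
    entities: a hypothetical list of topic entities to look for.
    """
    # How many site pages contain each block at least once.
    pages_containing = Counter(b for page in site_pages for b in set(page))
    threshold = boilerplate_share * len(site_pages)

    seen, meaningful = set(), []
    total = wasted = 0
    for block in page_blocks:
        n = len(tokenize(block))
        total += n
        is_boilerplate = bool(site_pages) and pages_containing[block] >= threshold
        is_duplicate = block in seen
        if is_boilerplate or is_duplicate:
            wasted += n  # repeated navigation or repeated paragraph
        else:
            meaningful.append(block)
        seen.add(block)

    # Entity mentions are counted only in the meaningful (non-wasted) text.
    text = " ".join(meaningful).lower()
    mentions = sum(text.count(e.lower()) for e in entities)
    return {
        "total_tokens": total,
        "wasted_tokens": wasted,
        "meaningful_share": (total - wasted) / total if total else 0.0,
        "tokens_per_entity": total / mentions if mentions else float("inf"),
    }
```

A lower `tokens_per_entity` value means more topic entities per token spent. In this sketch, every wasted token comes from repeated navigation or a duplicated block, so removing either one improves both numbers at once.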

Why Token Efficiency Impacts AI Visibility

Efficient pages:

- Are fully ingested

- Retain context

- Produce accurate summaries

Bloated pages:

- Lose critical sections

- Get partially summarized

- Are less likely to be cited

“AI does not reward length. It rewards clarity per token.”
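To make the truncation risk concrete, here is a toy Python model of a reader with a fixed token budget. It is a hypothetical simplification, not how any specific AI system ingests pages: tokens are counted as words, the budget of 10 is deliberately tiny, and the reader stops at the first block that would overflow.

```python
import re


def tokenize(text):
    # Crude word-level stand-in for a real tokenizer.
    return re.findall(r"\w+", text)


def ingest_with_budget(blocks, budget):
    """Keep blocks in reading order until the next one would exceed the budget.

    This models a hypothetical reader that drops everything after the cut;
    real systems may truncate mid-block or select passages differently.
    """
    kept, used = [], 0
    for block in blocks:
        n = len(tokenize(block))
        if used + n > budget:
            break
        kept.append(block)
        used += n
    return kept


nav = "Home Pricing Blog Docs Login Signup"
content = ["LLMic audits HTML locally.", "Nothing is uploaded to the cloud."]

bloated = [nav, nav] + content  # navigation repeated before the content
lean = content

print(ingest_with_budget(bloated, budget=10))  # only the first nav block fits
print(ingest_with_budget(lean, budget=10))     # both content blocks fit
```

With the same two content sentences, the lean page fits entirely within the budget, while the bloated page spends it on repeated navigation and loses all of its content.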

Speakability and AI Readability Scoring

AI systems prefer content that sounds natural when read aloud or summarized.

LLMic evaluates speakability, which measures how easily AI can convert your content into fluent language.

Speakability Factors Measured

- Sentence length balance

- Active vs passive voice

- Conversational phrasing

- Clear question-answer structures

- One-idea-per-paragraph formatting

Content that is easier to speak is also easier to summarize, quote, and reuse.
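Several of these factors can be approximated with simple text statistics. The Python sketch below is a heuristic illustration, not LLMic's speakability score: it splits sentences on `.`, `!`, and `?`, measures the mean and spread of sentence lengths in words, flags likely passive voice with a regular expression, and counts questions. Conversational phrasing and one-idea-per-paragraph formatting are not modeled.

```python
import re
from statistics import mean, pstdev

# Heuristic only: a form of "to be" followed by a word ending in -ed or -en.
# It misses irregular participles (e.g. "was built") and can flag words like "often".
PASSIVE = re.compile(r"\b(?:is|are|was|were|be|been|being)\s+\w+(?:ed|en)\b", re.IGNORECASE)


def speakability_signals(text):
    """Rough speakability signals for a block of prose."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return {}
    lengths = [len(s.split()) for s in sentences]
    return {
        "sentences": len(sentences),
        "mean_words": mean(lengths),       # average sentence length in words
        "length_spread": pstdev(lengths),  # how much sentence lengths vary
        "passive_share": sum(bool(PASSIVE.search(s)) for s in sentences) / len(sentences),
        "questions": sum(s.endswith("?") for s in sentences),
    }
```

For example, "What does LLMic check? It reads raw HTML. Pages are audited locally." yields three four-word sentences, one question, and one flagged passive construction.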

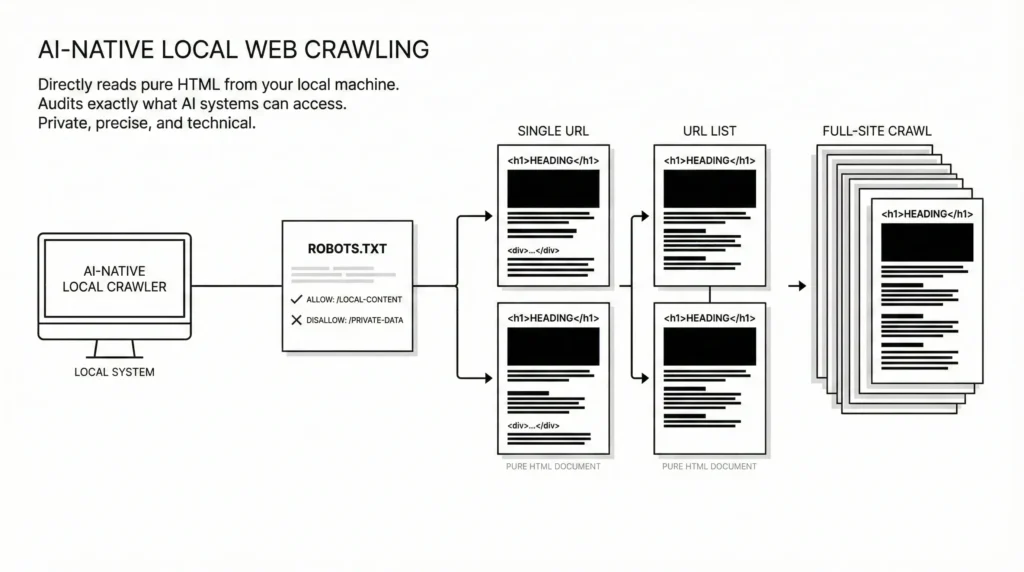

Entity Extraction and Semantic Understanding

AI systems understand topics through entities, not keywords.

LLMic extracts and evaluates:

- Primary topic entities

- Supporting contextual entities

- Missing entities that weaken topical authority

This helps identify:

- Under-explained topics

- Over-optimized content

- Semantic gaps compared to competitors

Strong entity coverage improves trust and reduces hallucination risk.
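The competitor comparison can be sketched as a set difference. The Python example below assumes a hand-built entity vocabulary in place of a named-entity recognition model or knowledge graph, so it only finds entities you already listed; the function names are hypothetical, and this is not necessarily how LLMic extracts entities.

```python
import re


def find_entities(text, vocabulary):
    """Return the vocabulary entries mentioned in text (case-insensitive)."""
    found = set()
    for entity in vocabulary:
        # \b boundaries assume entities start and end with a letter or digit.
        if re.search(r"\b" + re.escape(entity) + r"\b", text, re.IGNORECASE):
            found.add(entity)
    return found


def entity_gaps(page_text, competitor_texts, vocabulary):
    """Compare the entity coverage of a page against competitor pages."""
    ours = find_entities(page_text, vocabulary)
    theirs = set()
    for text in competitor_texts:
        theirs |= find_entities(text, vocabulary)
    return {
        "covered": ours,
        "missing_vs_competitors": theirs - ours,
    }
```

Entities that competitors mention but your page does not are candidates for under-explained topics and semantic gaps.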

Schema and Machine Trust Signals

Schema is a trust layer for machines.

LLMic audits structured data to ensure:

- Correct schema types are present

- Content matches structured markup

- No conflicting or misleading schema exists

Well-implemented schema helps AI systems:

- Verify facts

- Attribute sources

- Reduce uncertainty
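Two of these checks, content matching markup and conflicting schema, can be sketched with Python's `json` and `html.parser` modules. This is an illustrative assumption, not LLMic's audit logic: it reads only JSON-LD blocks (not Microdata or RDFa), compares each `headline` or `name` against the page's `<h1>` text, and reports a conflict when the same `@type` carries different titles. Checking whether the schema types are the right ones for a page would need rules this sketch does not include.

```python
import json
from html.parser import HTMLParser


class SchemaCollector(HTMLParser):
    """Collects JSON-LD script bodies and the text of <h1> elements."""

    def __init__(self):
        super().__init__()
        self.in_jsonld = False
        self.in_h1 = False
        self.buffer = []
        self.jsonld_blocks = []
        self.h1_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self.in_jsonld, self.buffer = True, []
        elif tag == "h1":
            self.in_h1 = True

    def handle_endtag(self, tag):
        if tag == "script" and self.in_jsonld:
            self.jsonld_blocks.append("".join(self.buffer))
            self.in_jsonld = False
        elif tag == "h1":
            self.in_h1 = False

    def handle_data(self, data):
        if self.in_jsonld:
            self.buffer.append(data)
        elif self.in_h1:
            self.h1_parts.append(data)


def audit_schema(html):
    """Return a list of human-readable schema issues for one page."""
    collector = SchemaCollector()
    collector.feed(html)
    collector.close()
    h1 = " ".join("".join(collector.h1_parts).split())  # normalize whitespace

    issues, titles_by_type = [], {}
    for raw in collector.jsonld_blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            issues.append("invalid JSON-LD block")
            continue
        if isinstance(data, dict):
            items = data.get("@graph", [data])
        elif isinstance(data, list):
            items = data
        else:
            items = []
        for item in items:
            if not isinstance(item, dict):
                continue
            schema_type = item.get("@type")
            if not schema_type:
                issues.append("JSON-LD item without @type")
                continue
            if isinstance(schema_type, list):
                schema_type = "/".join(map(str, schema_type))
            title = item.get("headline") or item.get("name")
            if not isinstance(title, str) or not title:
                continue
            titles_by_type.setdefault(schema_type, set()).add(title)
            # Content check: the markup title should match the visible <h1>.
            if h1 and title != h1:
                issues.append(f"{schema_type} title does not match <h1>")

    # Conflict check: the same type declared with different titles.
    for schema_type, titles in titles_by_type.items():
        if len(titles) > 1:
            issues.append(f"conflicting {schema_type} titles: {sorted(titles)}")
    return issues
```

A page with two `Article` blocks, one matching its `<h1>` and one carrying an outdated headline, produces both a mismatch issue and a conflict issue; a page whose single block matches its `<h1>` produces none.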

Feature Coverage Overview

| Feature Area | What LLMic Measures | AI Impact |
|---|---|---|
| AI-Native Crawling | HTML accessibility, robots.txt rules | Surfaces content hidden from AI |
| Token Economy | Efficiency per token | Improves ingestion |
| Speakability | Natural language flow | Better summaries |
| Entity Analysis | Topic grounding | Higher trust |
| Schema Audits | Machine verification | Reduced hallucination |
| Retrieval Readiness | Structural clarity | Higher reuse |

Traditional SEO Tools vs LLMic

| Aspect | Traditional SEO Tools | LLMic |
|---|---|---|
| Focus | Rankings | Understanding |
| Crawling | Cloud-based | Local, HTML-first |
| Metrics | Keywords, links | Tokens, entities |
| AI Readiness | Limited | Core design |
| Output | Scores | Actionable AI fixes |

Who Should Use LLMic

LLMic is designed for:

- SEO professionals preparing for AI-driven search

- Content teams optimizing for AI summaries

- Publishers and SaaS websites

- Agencies offering AI readiness audits

- Founders building future-proof content strategies

If AI systems matter to your traffic, LLMic features matter to your website.

Why LLMic Is Built Differently

LLMic is not a checklist tool.

It is a content comprehension debugger.

SEO helps pages rank.

LLMic helps pages be understood, trusted, and reused by AI systems.

That difference defines the future of search.